Wunderman Thompson has developed technology to analyse how consumers emotionally respond to an ad and which elements of a video hold their attention, so clients can tweak their campaigns to elicit a desired response.

The solution, called Reveal, combines traditional creative testing techniques with proprietary and licensed technology.

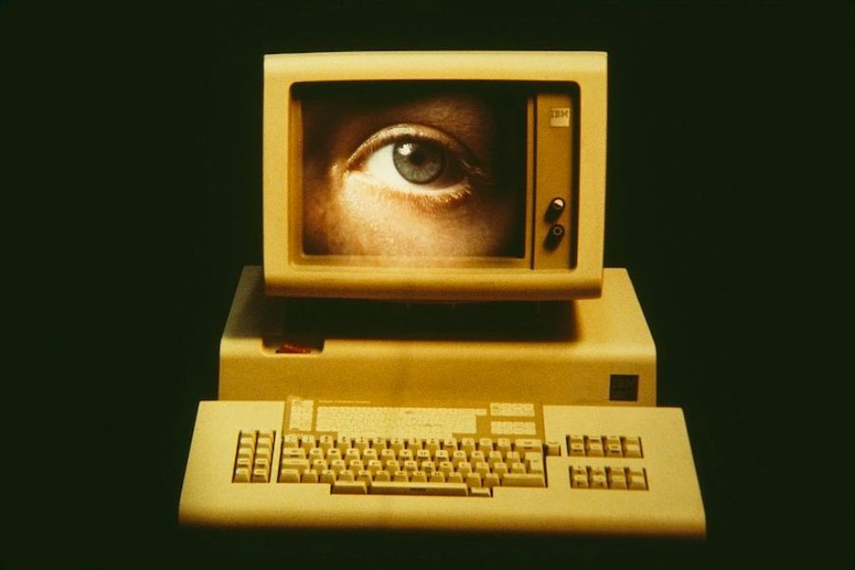

Clients select a group of consumers, sourced from panel providers, who match their target audience. Panel members then opt in to having their faces recorded “for a couple of minutes” while they watch the client’s video ad, similar to the way measurement providers gauge audience attention levels.

Wunderman Thompson’s machine learning model then analyses the recording for seven micro expressions: anger, disgust, fear, happy, neutral, sad and surprise. The agency has also licensed technology that tracks consumers’ eyes as they focus on specific objects within a video.

Face tracking is combined with feedback from a consumer survey. The process takes about a week, Wunderman Thompson said. After the analysis is complete, the video recordings are deleted.

“Our promise is that we are faster [than other creative testing] because we've got all this automation,” said Lucile Ripa, head of analytics and data science at Wunderman Thompson Data. “So it will not take months and months to build a big report full of KPIs, we want to be much more agile and dynamic to be able to test our clients’ creative at multiple points—not only when it's completely final.”

The agency believes this will result in more effective ads as well as a better consumer experience.

“Essentially, we’re just trying to measure positive emotions so that ultimately we get more positive emotions from consumers seeing the ads,” said David Lloyd, EMEA managing director of data at Wunderman Thompson.

The tool took about eight months to create. Wunderman Thompson said it decided to develop a proprietary emotion detection model because existing models, such as those within Google’s Cloud Vision API, were limited to macro expressions.

“When we were using this [Google’s] model we ended up with 19% neutral expression, because this model is based on people being very smiley or very sad. So it was not at all fit for purpose, because when you watch a piece of advertising, you could laugh, but usually you've got very small movement in your face,” said Ripa.

The agency said it also had to train the model to take into account how men and women express emotions differently.

Wunderman Thompson said it has lined up trials of the solution with some of its “major” clients, including one in travel and leisure, over the coming weeks and months.

Lloyd said the agency is selective about which products it chooses to develop in-house “in part because we know there are enormous organizations out there that are probably much more purist.”

“We know we’re not going to be able to compete with the likes of Google and Microsoft in terms of their investment in data science and technology. So we have a lot of focus around what we do and what we don't do,” he said.

He believes Wunderman Thompson’s advantage over tech vendors is that it is a user of technology as much as a developer.

“We know when there's a need or opportunity because we live the gap that we see in the work we’re doing for our clients,” Lloyd said.

(This article first appeared on CampaignLive.com)
